Electronic Records Management Guidance on Methodology for Determining Agency-unique Requirements

ATTENTION! This product is no longer current. For the most recent NARA guidance, please visit our Records Management Policy page.

Electronic Records Management (ERM) Initiative

November, 2005

Recommended Practice: Evaluating Commercial Off-the-Shelf (COTS) Electronic Records Management (ERM) Applications

Enterprise-wide records and document management in an information-intensive organization is a complex undertaking. Identifying a Commercial Off-the-Shelf (COTS) system which meets an organization's needs can be a daunting task. In approaching this challenge, it can be helpful to understand how other organizations have tackled this effort. While no single organization's experience can possibly identify the myriad issues which can arise, it can provide valuable information on how to get started and issues that should be considered.

This document summarizes the Environmental Protection Agency's (EPA) experience identifying the COTS products that would best meet the needs of agency staff for both Electronic Document Management (EDM) 1 and Electronic Records Management (ERM) 2 functionality and has been informed by review from partner agencies in the E-Gov ERM Initiative. We hope this document can be used as a case study as other organizations move forward in examining their requirements and identifying systems to evaluate. It should be used in conjunction with existing Office of Management and Budget (OMB) policies in OMB Circulars A-11 and A-130, and in other OMB guidance for managing information systems and information technology (IT) projects, and with other NARA records management regulations and guidance.

The goal of this document is to provide practical guidance to federal agency officials who have a role in the selection of enterprise-wide ERM systems; a subsequent document will deal with developing and launching an ERM pilot project. This document is composed of five sections, followed by a Glossary, and two Appendixes:

- Introduction

- Application of This Guidance Document

- Evaluating COTS Software (Methodology)

- Step One: Analyze existing requirements

- Step Two: Develop a manageable set of high-level criteria and scoring guide

- Step Three: Gather information about each product

- Step Four: Evaluate COTS against criteria and score each product

- Step Five: Determine how the top three COTS solutions match your agency's specific requirements

- Step Six: Present analysis and recommendation to governance or decision-making body.

- Lessons Learned

- Summary

APPENDIX A: Overview of Steps Leading up to Evaluation of COTS Requirements

APPENDIX B: Criteria Used by EPA for COTS Evaluation

APPENDIX C: Resources for the Evaluation of Commercial Off-the-Shelf (COTS) Software

1. INTRODUCTION

The strategic focus of the Office of Management and Budget's (OMB) Electronic Government (E-Gov) Initiatives is to utilize commercial best practices in key government operations. The National Archives and Records Administration (NARA) is the managing partner for the ERM E-Gov Initiative. NARA's ERM Initiative provides a policy framework and guidance for electronic records management applicable government-wide. NARA's ERM Initiative is intended to promote effective management and access to federal agency information in support of accelerated decision making. The project will provide federal agencies guidance in managing their electronic records and enable agencies to transfer electronic records to NARA.

This practical guidance document is one of a suite of documents to be produced under NARA's ERM Initiative. These documents form the structural support for ensuring a level of uniform maturity in both the Federal government's management of its electronic records and its ability to transfer electronic records to NARA.

This is the third of six documents to be produced under the Enterprise-wide ERM Issue Area, providing guidance on developing agency-specific functional requirements for ERM systems to aid in the evaluation of COTS products. The first document provides guidance for Coordinating the Evaluation of Capital Planning and Investment Control (CPIC) Proposals for ERM Applications 3 and the second, Electronic Records Management Guidance on Methodology for Determining Agency-unique Requirements 4 , offers a process for identifying potential ERM system requirements that are not included in the Design Criteria Standard for Electronic Records Management Applications, DOD 5015.2-STD (v.2). This document is issued as a recommended practice or practical guidance to assist agencies as they plan and implement ERM systems.

Subsequent documents will consist of advisory guidance for Building an Effective ERM Governance Structure, developing and launching an ERM pilot project, and a "lessons learned" paper from EPA's proof of concept ERM pilot as well as other agencies' implementation experience. Based on EPA's experience with and learning from the development and implementation of its own electronic records and document management system, the guidance documents are aimed at helping federal agencies understand the technology and policy issues associated with procuring and deploying an enterprise-wide ERM system.

2. APPLICATION OF THIS PRACTICAL GUIDANCE DOCUMENT

This practical guidance is meant to help agency staff effectively identify and assess ERM systems capable of managing the electronic records that an agency must maintain to comply with legal mandates, and recommend appropriate applications for agency-wide use. While many agencies have established records management systems for retaining and retiring paper records, many do not have electronic systems to assist individual staff members in their day-to-day creation, management, and disposition of electronic records, including e-mail. 5 The document summarizes the steps taken by EPA as it considered the COTS systems that would meet the specific requirements for its agency-wide ERM effort. 6 While this document is based on the specific experiences of EPA (i.e., a pairing of an Electronic Document Management System (EDMS) and a Records Management Application (RMA)), the principles included herein could be used to evaluate a COTS RMA in a variety of other implementation scenarios, e.g., an RMA coupled with other types of COTS applications such as an Enterprise Content Management System (ECM).

The primary audiences for this document are the officials, teams, and work groups charged with the task of selecting an ERM system. It is meant to help agencies develop criteria important to the selection of COTS products, and a method for weighting the criteria. As with other IT systems, agencies must adhere to OMB policies and guidance when planning for and selecting an ERM system. These policies are articulated in OMB Circular A-11, Preparation, Submission and Execution of the Budget 7 and OMB Circular A-130, Management of Federal Information Resources. 8 Additional OMB guidance is found in OMB Memorandums (see http://www.whitehouse.gov/omb/memoranda/index.html).

This practical guidance was born out of the experience of federal agency managers whose aim was to have one enterprise-wide system that could accommodate records and document management, as well as E-FOIA requirements, and who needed to evaluate potential product solutions. It focuses on the identification and selection of COTS products to meet the needs of an agency. Implementation of portions of this guidance may be facilitated by third-party analytical services.

The process described in this document provides insight into the evaluation of products that meet an agency's requirements for an ERM system and perform effectively within the agency's environment. In an agency such as the EPA, with its relatively independent operating units, the process to identify and select products was inclusive. How much of this process you choose to adopt for your Agency's ERM initiative can be affected by many variables, such as:

- The size of the agency

- Its approach to technology and ERM (centralized, decentralized, or distributed)

- Its existing technology infrastructure (as well as anticipated changes in the information architecture)

- Availability of IT personnel

- The skill sets required for development of an ERM operational strategy and ultimate deployment.

Based on this information, the methodology can be modified to better suit the particular needs and concerns of your organization.

Before you determine your agency's requirements for an enterprise-wide ERM system, you must assess its particular needs for automating the records management process. Appendix A provides a helpful overview of what should be considered for inclusion in the system and the steps that must be accomplished.

3. EVALUATING COMMERCIAL OFF-THE-SHELF (COTS) SOFTWARE (METHODOLOGY)

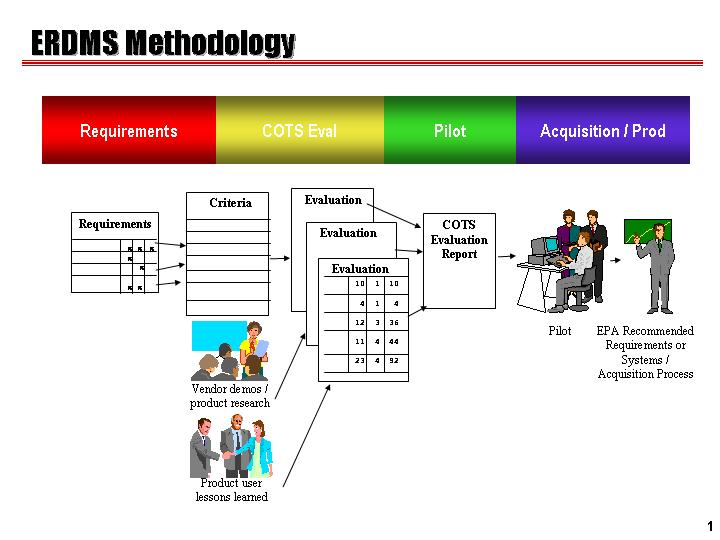

An agency endeavoring to implement enterprise-wide ERM should consider the following steps when performing a COTS software evaluation to identify and assess products that will meet the requirements and perform effectively within its particular environment. The time required to complete each step will vary with the size of the agency, complexity of the project, availability of staff to participate, and the number of products to be evaluated. For EPA, the entire process required nine months, as depicted below.

Figure 1 provides an overview of the ERM development process.

Figure 1. Overview of the ERM Development Process

Step One - Analyze existing requirements

The first critical component for Step One is involvement of agency staff in the identification of existing requirements. Appropriate staff to involve may include: users who are policy makers and legal counsel; administrative and scientific users; records managers; and technical staff. Ensure appropriate geographic representation in the user groups and evaluation team. The more varied the responsibilities of the individuals consulted during the requirements analysis, the more comprehensive and accurate the set of criteria developed will be. This will yield an ERM system that better meets the needs of users, assuring usage by those who had input to the initial stage of development.

Begin the COTS software evaluation with an analysis of your agency's requirements for managing documents, records, and E-FOIA. These "functional requirements" are critical to determining which COTS products should be evaluated. The following resources can be helpful:

- The NARA-endorsed revised Department of Defense's 5015.2-STD, Design Criteria Standard for Electronic Records Management Software Applications (released June 2002). This standard provides a baseline for ERM applications to manage electronic records, and NARA policy is that agencies should employ a 5015.2-certified application. Then, determine if your agency has additional requirements.

- The second guidance in this suite, Electronic Records Management Guidance on Methodology for Determining Agency-unique Requirements (August 23, 2004), provides a process for identifying potential ERM system requirements that are not included in the Design Criteria Standard for Electronic Records Management Applications, DOD 5015.2-STD (v.2) ( http://www.archives.gov/records-mgmt/policy/requirements-guidance.html).

- Draw on analyses (e.g., for electronic records, documents, or FOIA) from within your organization or other federal agencies. These analyses may have disparate requirements which need to be considered. They may also be out-of-date and need to be updated.

- Professional and standards organizations are another source of ERM requirements. Their standards and reports will help you develop a comprehensive list of functionality. Some of the organizations to consult include the Association for Information and Image Management (AIIM) for such publications as Requirements for Document Management Services Across the Global Business Enterprise, April 1999; the American National Standards Institute (ANSI) Framework for Integration of Electronic Document Management Systems and Electronic Records Management Systems (ANSI/AIIM TR48-2004); and ARMA (formerly the Association of Records Managers and Administrators, now ARMA International), DOD 5015 Review Task Force comments to DoD, December 1999. 9

- The Service Component Reference Model (SRM) of the Federal Enterprise Architecture "is a business and performance-driven, functional framework that classifies Service Components with respect to how they support business and/or performance objectives. The SRM is intended for use to support the discovery of government-wide business and application Service Components in IT investments and assets. The SRM is structured across horizontal and vertical service domains that, independent of the business functions, can provide a leverage-able foundation to support the reuse of applications, application capabilities, components, and business services." Service domains that are of particular use for ERM applications include Customer Services, Process Automation, Digital Asset, Back Office, and Support. For more information about the SRM, consult http://www.whitehouse.gov/omb/egov/a-4-srm.html.

- Case studies can also help to identify ERM requirements. Augment the basic requirements with your knowledge of industry practice and through interviews with other federal agencies performing ERM. This can be accomplished by referencing the literature for case studies and other "how to" guides.

- Benchmarking studies, even those conducted by a vendor, can remind you of functionality you may wish to seek from an ERM system. (For example, Doculabs' 1998 Records Management Benchmark Study outlines the critical categories that are important to consider when evaluating records management technologies.)

- Talk to colleagues at other federal agencies who may have undergone a similar exercise and have valuable experiences to share.

In addition, you will have other technical and programmatic requirements, such as Section 508 or interoperability with other systems, that should be addressed as part of the COTS evaluation. You also should evaluate your agency's existing systems and their functionalities to determine how a selected COTS application will be integrated into existing, related systems.

Combine requirements into a master table, functionally organized into categories (e.g., capture, workflow, storage, search and retrieval, redaction, publishing, general, management, and system) grouping requirements common to different components of the system (e.g., EDM, ERM, and E-FOIA). This breakdown will also facilitate the evaluation of COTS tools for their functionality across multiple components.
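As a rough illustration of such a master table, the following Python sketch groups requirements by functional category and tags each with the components it applies to. The requirement identifiers, texts, and function names are hypothetical and are not taken from EPA's actual requirements list.

```python
from collections import defaultdict

# Hypothetical requirement records: (id, text, functional category, components).
requirements = [
    ("R1", "Capture e-mail with attachments", "capture", {"EDM", "ERM"}),
    ("R2", "Set conditional routing in the workflow", "workflow", {"EDM", "E-FOIA"}),
    ("R3", "Redact exempt text before release", "redaction", {"E-FOIA"}),
    ("R4", "Full-text search across repositories", "search and retrieval",
     {"EDM", "ERM", "E-FOIA"}),
]


def build_master_table(reqs):
    """Group requirements by functional category, keeping the component tags."""
    table = defaultdict(list)
    for req_id, text, category, components in reqs:
        table[category].append((req_id, text, sorted(components)))
    return dict(table)


def shared_requirements(reqs, minimum_components=2):
    """Return ids of requirements common to at least `minimum_components` components."""
    return [req_id for req_id, _, _, components in reqs
            if len(components) >= minimum_components]


master_table = build_master_table(requirements)
shared = shared_requirements(requirements)
```

Keeping the component tags on each row is one way to see at a glance which requirements a single COTS tool would need to satisfy across EDM, ERM, and E-FOIA.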

Outcome: You have now identified the functional requirements that exist in your agency. These will be further refined during Step Two of the COTS Evaluation and form the foundation on which you will build your ERM product analysis.

Step Two - Develop a manageable set of high-level criteria and scoring guide

The purpose of Step Two is to help you take the requirements you have assembled in Step One and create a manageable set of high-level criteria important to the selection of the products. Weighting the criteria and creating a scoring mechanism will allow you to rank the products selected for evaluation.

Mapping the detailed functional requirements developed in Step One to high-level criteria. Mapping the numerous, detailed requirements to more general criteria will minimize the level of effort needed for the product evaluations. For example, requirements such as "ability to set milestones in the workflow" and "the ability to set conditional routing in the workflow" can both be mapped to the criterion "Workflow." A description of the evaluation criteria used by EPA is presented in Appendix B.

Aggregating these high-level criteria into categories will further expedite the process. As an example, EPA identified 451 requirements. These were mapped to 23 criteria aggregated into five categories (Functional Requirements, Integration Requirements, Technical Requirements, Deployment, and Market Presence). Figure 2 depicts the process for mapping requirements to product evaluation criteria.

Figure 2. Mapping Requirements to Product Evaluation Criteria
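The two-level roll-up described above (requirements to criteria, criteria to categories) can be sketched in Python as follows. The mapping entries are illustrative only; the criterion name "Web-based Access" and the function name are assumptions, not EPA's actual lists.

```python
# Hypothetical mapping tables; the entries are illustrative, not EPA's lists.
requirement_to_criterion = {
    "Ability to set milestones in the workflow": "Workflow",
    "Ability to set conditional routing in the workflow": "Workflow",
    "System shall provide a web interface": "Web-based Access",
}
criterion_to_category = {
    "Workflow": "Functional Requirements",
    "Web-based Access": "Technical Requirements",
}


def roll_up(req_map, crit_map):
    """Return {category: {criterion: [requirements]}}.

    A KeyError signals a criterion that has not been assigned to a category.
    """
    rolled = {}
    for requirement, criterion in req_map.items():
        category = crit_map[criterion]
        rolled.setdefault(category, {}).setdefault(criterion, []).append(requirement)
    return rolled


rolled = roll_up(requirement_to_criterion, criterion_to_category)
```

A structure like this makes it easy to confirm that every detailed requirement is covered by some criterion before the evaluation begins.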

Weighting the high-level criteria to reflect the relative importance of the functions they represent will make the product evaluations more meaningful and make it easier to compare one product to another. At EPA, members of the RMA Steering Committee were asked to assign a total of 100 points to the five criteria categories (Functional Requirements, Integration Requirements, Technical Requirements, Deployment, and Market Presence). Next, committee members were given 100 points to distribute to the criteria within each category. Their results were combined to calculate an average weighting.
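A minimal Python sketch of this averaging step is shown below. The two ballots are invented for illustration, and the function name is an assumption; the same function could be applied again to the 100 points distributed among the criteria within each category.

```python
def average_weights(ballots, total=100):
    """Average point allocations across committee members.

    Each ballot maps a category (or criterion) name to the points a member
    assigned. Every ballot must cover the same names and sum to `total`.
    """
    names = set(ballots[0])
    for ballot in ballots:
        if set(ballot) != names:
            raise ValueError("every ballot must cover the same names")
        if sum(ballot.values()) != total:
            raise ValueError(f"each ballot must allocate exactly {total} points")
    return {name: sum(b[name] for b in ballots) / len(ballots) for name in ballots[0]}


# Hypothetical ballots from two committee members.
ballots = [
    {"Functional Requirements": 40, "Integration Requirements": 20,
     "Technical Requirements": 20, "Deployment": 10, "Market Presence": 10},
    {"Functional Requirements": 50, "Integration Requirements": 20,
     "Technical Requirements": 10, "Deployment": 10, "Market Presence": 10},
]
category_weights = average_weights(ballots)
```

Because each ballot sums to 100, the averaged weights also sum to 100, so they can be read directly as percentages.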

Enterprise-wide ERM functionality is so essential to the project that agencies may choose to assign a mandatory criteria level for this criterion. Also identify any other criteria that are mandatory. It is necessary to have the results of this weighting process approved by the governance board before the evaluation begins.

Developing questions related to the criteria will aid in product evaluations. Often, requirements will be expressed in general terms. For example, "System shall provide a web interface." However, the requirement does not specifically indicate what this means. Questions developed concerning the strength of a web interface will help to clarify what is meant by the requirement, both for the agency and the vendor. The EPA project team, in consultation with a consultant, developed a series of questions for each criterion to be sent to vendors. Alternatively, these questions may be posed during vendor demonstrations. Examples of questions supplementing specific criteria are provided in Figure 3.

Establishing numeric values to assess how products meet each criterion (e.g., 0=not met, 1=low, 2=medium, 3=high, 4=exceptional) will allow each product to be evaluated against each criterion.

Designing a guide to scoring the product solutions for each criterion (for example, by assigning a value to each question, contributing to the total value of the criterion) will permit a weighted score to be computed for each criterion by multiplying the weight for a particular criterion by the score assigned during the product evaluation phase of the project. This weighted score will determine the solution rankings. Figure 3 presents a sample page from a COTS Scoring Guide, illustrating the values assigned to questions developed to enrich the evaluation.

Figure 3. Sample page from COTS Scoring Guide for Web-based Criteria
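One possible shape for such a scoring guide is sketched below in Python. It assumes that each question carries a share of the criterion's 0 to 4 scale and that the evaluator awards a fraction of each share; the questions, their values, and the fractional-credit rule are hypothetical and do not reproduce EPA's actual guide.

```python
# Hypothetical guide for one criterion: the question values sum to the
# top of the 0-4 scale described above.
web_guide = {
    "Is all end-user functionality available through a browser?": 2.0,
    "Is administration available through a browser?": 1.0,
    "Does the interface work without client-side plug-ins?": 1.0,
}


def score_criterion(guide, answers):
    """Sum each question's value, scaled by the fraction (0.0-1.0) awarded.

    Questions missing from `answers` earn nothing.
    """
    score = 0.0
    for question, value in guide.items():
        fraction = answers.get(question, 0.0)
        if not 0.0 <= fraction <= 1.0:
            raise ValueError("awarded fraction must be between 0 and 1")
        score += value * fraction
    return score


# Hypothetical evaluation of one product against the guide.
answers = {
    "Is all end-user functionality available through a browser?": 1.0,
    "Is administration available through a browser?": 0.5,
}
web_score = score_criterion(web_guide, answers)
```

Writing the guide down in this form before the demonstrations helps different evaluators arrive at comparable scores for the same product.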

Outcome: You now have a manageable set of high-level criteria by which to evaluate your ERM product solutions, weighted to reflect the relative importance of the functions they represent, and a guide by which to score the products. Step Three will help you determine which product solutions to include in your evaluation.

Step Three - Gather information about each product solution

If the key to success in Step One was involving a diverse group of agency staff in the identification of existing requirements for ERM, the success factor for Step Three is employing a variety of mechanisms to gather the information that will be required to evaluate product solutions against the criteria.

Identifying COTS products. You will want to begin by identifying COTS ERM products. While many listings are available, a good place to start would be those products that meet DoD 5015.2-STD certification. The Joint Interoperability Test Command (JITC) performs testing of RMA products for compliance with DoD 5015.2-STD. Products that have been successfully tested are listed in the compliant Product Register along with vendor and product information. More information on the DoD 5015.2-STD, the certification process, and the Product Register is available at http://jitc.fhu.disa.mil/recmgt/index.html. Another valuable technique for identifying sources of COTS products is a Request for Information (RFI). RFIs can generally generate a lot of vendor interest in your project.

Initial screening. Establishing a set of requirements that ERM solutions need to meet in order to be considered for further evaluation is the first step in product examination. These minimum requirements, a subset of the COTS product evaluation criteria, will establish a threshold for products to be considered. Vendors that demonstrate competencies during the initial screening should be asked to provide a demonstration to review specific software functionality and to answer additional technical questions. These initial vendor screenings will limit evaluation to the best possible field of candidates.

Some requirements may be suspended as a result of the initial screening because they too severely narrow the pool of potential solutions. When contacted, some vendors may choose not to participate in the evaluation, further reducing the number of COTS products included in the evaluation.
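The screening logic can be illustrated with a short Python sketch. The product names, capability labels, and function names are hypothetical. The second helper counts how many products each minimum requirement eliminated, which is one way to spot a requirement that narrows the pool too severely.

```python
def screen_products(products, minimum_requirements):
    """Split products into those meeting every minimum requirement and the rest."""
    passed, rejected = [], {}
    for name, capabilities in products.items():
        missing = sorted(minimum_requirements - capabilities)
        if missing:
            rejected[name] = missing
        else:
            passed.append(name)
    return passed, rejected


def rejection_counts(rejected):
    """Count how many products each unmet requirement eliminated."""
    counts = {}
    for missing in rejected.values():
        for requirement in missing:
            counts[requirement] = counts.get(requirement, 0) + 1
    return counts


# Hypothetical threshold and candidate products.
minimum = {"DoD 5015.2-STD certified", "web interface", "e-mail capture"}
products = {
    "Product A": {"DoD 5015.2-STD certified", "web interface", "e-mail capture", "redaction"},
    "Product B": {"DoD 5015.2-STD certified", "web interface"},
    "Product C": {"web interface"},
}
passed, rejected = screen_products(products, minimum)
counts = rejection_counts(rejected)
```

In this invented example, "e-mail capture" eliminates two of three products, so the team might discuss whether to suspend it for the initial screening.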

As part of either the initial screening or the initial briefing, you will want to gather information on the vendor's ongoing responsibility for updating software, the integration of new versions as they are issued, and how this whole process will be handled in the future.

Vendor demonstrations. Vendor demonstrations can be performed in stages, each with its own purpose.

Initial briefing. In preparation for the initial briefing, a generic project overview should be created and sent to the vendors. The objectives of the initial briefings are to:

- Provide company overview, history, and product philosophy

- Provide a basic understanding of product strengths

- Provide a basic understanding of how the products integrate with ERM, EDM, and E-FOIA systems, as your project dictates

- Provide an indication of company performance and ERM experience and projects

- Create benchmarks so that the solutions can be comparatively scored.

Technical briefing. In preparation for the technical briefing, a written set of questions should be sent to the vendors for response. The objectives of the technical briefing are to demonstrate specific software functionality and answer additional technical questions.

Follow-up demonstration. In preparation for the follow-up demonstration, scenarios should be sent to the vendors. The objectives of the follow-up demonstrations are to expose the agency to potential ERM products for a pilot, provide an opportunity to ask questions of vendors and verify analysis, and compare and contrast ERM products.

TIP: Ask each vendor how it employs its product to manage its own company's information.

Product literature review. Review of technical literature plays an important role in product evaluation. Sources for technical literature include brochures and technical specifications provided by the vendors; white papers provided by the vendors and independent parties; vendor product websites; association websites; and the Department of Defense Joint Interoperability Test Command (JITC) RMA Certification website ( http://jitc.fhu.disa.mil/recmgt/index.html).

Federal agency user interviews. Use interviews with federal agency users who have firsthand experience with ERM or who are in the planning stages for acquiring ERM systems to gather generic lessons learned in transitioning or piloting electronic records management, and technical insights on specific ERM systems.

Outcome: You have now identified the COTS products you will evaluate and have amassed the information you need to perform a thorough evaluation (Step Four in this COTS Software Evaluation).

Step Four - Evaluate COTS products against criteria and score each product

The purpose of Step Four is to evaluate COTS software, based on the criteria established and the questions developed in Step Two, creating product scores. Product scoring will permit comparisons on a variety of functional requirements. In this respect, presentation (that is, the creation of tables that can easily be read and understood) is critical.

First, create a product profile for each solution, summarizing the technical information you have gathered in Step Three. In addition to basic vendor information, present the technical information by the categories into which the criteria have been organized. In the case of EPA, there were five: Functional Requirements, Integration, Technical Requirements, Deployment, and Market Presence.

Using the technical information you have collected in Step Three, and the numeric values and guide to scoring product solutions for each criterion established during Step Two, calculate scores for every product against each criterion. Note that few will reach the highest scoring in terms of any functionality. You will want to reserve the high end of the scoring range (typically, 3.5 - 4.0) for exceptional functionality where a vendor goes well above what was required.

A weighted score can be computed for each criterion by multiplying the weight assigned to each criterion in Step Two by the product score. Totals for each product can be computed for both the raw and weighted scores by adding the scores for each criterion. These can be presented in a convenient table appended to each product profile, as illustrated in Figure 4.

Figure 4. Sample COTS Product Scoring Profile
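The arithmetic behind such a profile can be sketched in Python as follows. The criteria, weights, and scores are invented for illustration, and the function names are assumptions rather than part of EPA's method.

```python
def score_product(weights, raw_scores):
    """Compute per-criterion weighted scores plus raw and weighted totals."""
    if set(weights) != set(raw_scores):
        raise ValueError("weights and scores must cover the same criteria")
    weighted = {name: weights[name] * raw_scores[name] for name in weights}
    return {
        "weighted": weighted,
        "raw_total": sum(raw_scores.values()),
        "weighted_total": sum(weighted.values()),
    }


def rank_products(weights, products):
    """Order product names from highest to lowest weighted total."""
    totals = {name: score_product(weights, scores)["weighted_total"]
              for name, scores in products.items()}
    return sorted(totals, key=totals.get, reverse=True)


# Hypothetical weights (expressed as fractions) and raw scores on the 0-4 scale.
weights = {"Workflow": 0.5, "Search and Retrieval": 0.25, "Redaction": 0.25}
products = {
    "Product X": {"Workflow": 3, "Search and Retrieval": 2, "Redaction": 4},
    "Product Y": {"Workflow": 2, "Search and Retrieval": 3, "Redaction": 3},
}
profile_x = score_product(weights, products["Product X"])
ranking = rank_products(weights, products)
```

Here Product X has a weighted total of 3.0 against 2.5 for Product Y, even though the two raw totals differ by only one point, which shows how the weights shift the comparison toward the criteria the agency values most.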

Compiling all of the scores, raw and weighted, will allow you to rank products by their total raw and total weighted scores. Figure 5 shows raw (unweighted) and weighted scores for ERM solutions in this evaluation; values presented in this table are for illustrative purposes only.

Figure 5. ERM Solutions Criteria Scores

Depending on the number of criteria and products involved in your evaluation, this table can be difficult to read and digest. To make it more manageable, assess each category separately to see how well each product addresses that part of your agency's overall strategy for an ERM system.

Figure 6 shows the percentage of total possible score each product received for each category. For example, if Product X received a total raw score of 5.61 out of a possible 6 for Functional Requirements, this would equate to a percentage score of 94% for this category. Rankings (high, medium, and low) can be applied to the category percentages and color coded:

- High for over 90, highlighted in green

- Medium for percentages between 75 and 90, highlighted in yellow

- Low for less than 75, highlighted in red.

Those products represented primarily in green would be worthy of consideration. Figure 6 shows strategy weighted product score percentages for major categories of evaluative criteria. These categories are used for illustrative purposes only; your assessment may yield other functionality requirements aggregated into different, higher-level criteria categories.
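The percentage and ranking rules above can be expressed as a small Python sketch. The function names are assumptions, and the treatment of the exact boundary values (90 and 75 both fall in Medium) is one reading of the thresholds listed above.

```python
def percent_of_possible(score, possible):
    """Express a category score as a percentage of the maximum possible score."""
    if possible <= 0:
        raise ValueError("possible score must be positive")
    return 100.0 * score / possible


def ranking_band(percent):
    """Map a category percentage to the High / Medium / Low rankings."""
    if percent > 90:
        return "High"    # highlighted in green
    if percent >= 75:
        return "Medium"  # highlighted in yellow
    return "Low"         # highlighted in red


# Product X from the example above: 5.61 out of a possible 6.
product_x_percent = percent_of_possible(5.61, 6)
product_x_band = ranking_band(product_x_percent)
```

Applying the same two functions to every product and category yields the color-coded grid described for Figure 6.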

Figure 6. Strategy Weighted Score Percentages

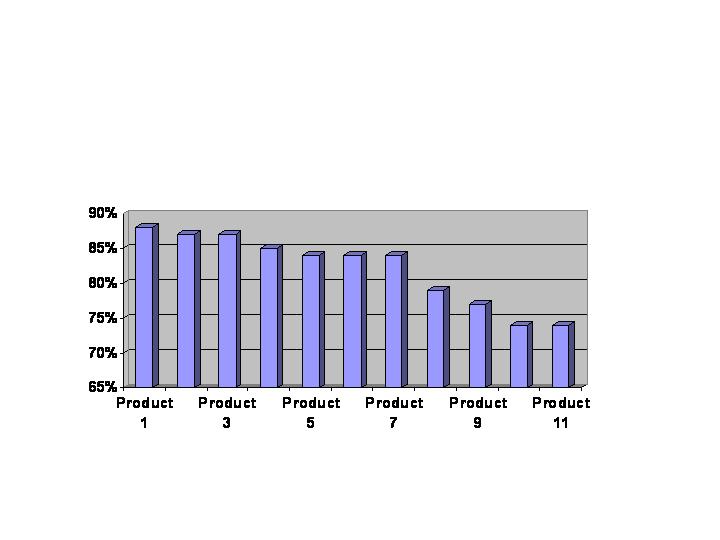

Figure 7 shows the relative scores of the ERM solutions reviewed during the evaluation, illustrating the systems that best meet the agency requirements.

Figure 7. Percentage of Total Possible Weighted Score

Outcome: You now have a profile for each COTS product that has passed the initial screening process and tables that provide a comparison for each product by the criteria you have developed based on your agency's ERM requirements. Using these, you will be able to identify the top COTS solutions with functionality matching your organization's requirements. The next step is to consider what you will want to tell a governance or decision-making body about those products, their strengths and weaknesses, and their ability to integrate into your agency.

Step Five - Determine how the top three COTS solutions match your agency's specific requirements

Having scored and ranked several COTS products, you are now ready to focus on the solutions that are the most likely candidates for acquisition. The purpose of Step Five is to provide new insight into the top COTS solutions, comparing and contrasting them against criteria that may not have been emphasized in your previous analyses. All of this work is in preparation for presenting the ERM product analysis and the solution recommended for your agency to a Governance or Decision-Making Body.

Create a table that provides detailed comparison of the top product solutions. The higher scoring products will all work well within your agency, but the success of ERM will ultimately depend on system implementation. The criteria you select to present in this table should reflect the needs your agency valued most highly during the requirements assessment (Step One) and criteria weighting (Step Two), as well as other criteria that will make the system easy to use and increase acceptance, agency-wide. This can be helpful as you determine how well the system will fit into your organization's environment.

Your COTS software evaluation must identify and assess products that would meet the requirements of the agency staff and mission, and perform effectively within its environment. Consider the following as you narrow the product selections toward a recommendation:

- What is the extent to which your agency's staff collaborate, building on the work of others?

- Is the product compatible with the current technical environment (as well as anticipated changes in that environment)?

- Will the product integrate with or replace legacy document and records systems? The success of an enterprise system will be largely based on its ability to integrate with or replace existing legacy document and records systems. Otherwise, it will simply be yet another isolated, stovepipe system with limited value.

- Will the product work within your agency's infrastructure (to ensure interoperability with the network and peripherals in place today, as well as anticipated changes to the architecture)?

- Since few COTS products function ideally directly "out of the box," will the vendor be willing to modify the "out of the box" solution (and/or the product be capable of modification), at little or no additional cost, to more perfectly fit the agency's unique operating environment?

- Can your information technology (IT) staff support the system? Do you have the personnel required to implement and maintain an enterprise-wide ERM system in terms of numbers of staff and skill sets required? Will you have the authority to hire additional personnel or purchase assistance for support from the vendor, if available?

- Does staff have the level of knowledge and training required to use the system? These are time and money issues that will factor into the final product selection decision.

- Has a part of your organization already invested in any of the top three product solutions? An existing relationship with one of the product vendors or existing licenses purchased will affect not only the cost of a product solution but also the time it will take to acquire and implement the system. Personnel may already be familiar with the product, saving additional effort on the part of IT staff (during installation, integration with other systems, and for ongoing maintenance) and/or users (who will not have to learn a new system). Valuable knowledge concerning the product may already exist in your agency and should be tapped.

By now, you have collected quite a bit of information about each product and created several tables that highlight different aspects of the products with regard to specific sets of criteria. Each of the solutions will have its strengths and weaknesses. The higher scoring products in the evaluation will all work well within your agency, but the success of the ERM system will ultimately depend on its implementation. The solution must be integrated to support the agency's business processes and configured to meet the needs of agency staff.

Outcome: Based on your analyses, identify the product that best meets your agency's business needs and will work best in its environment. The final step is to determine what you will need to convey to the Governance or Decision-Making Body and how you will convince its members that the solution you have chosen is the best option for your agency.
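The comparison described in this step can be sketched in a few lines of Python. This is a minimal illustration only, not EPA's method: the fit questions paraphrase the checklist above, and the weights, the 0-100 scale, the `fit_share` blend, and all names (`Candidate`, `fit_score`, `rank`) are assumptions introduced for the example.

```python
from dataclasses import dataclass

# Hypothetical fit questions paraphrasing the Step Five checklist. The weights
# are illustrative assumptions, not values from EPA's evaluation.
FIT_WEIGHTS = {
    "infrastructure_compatibility": 3,
    "vendor_willing_to_modify": 2,
    "it_staff_can_support": 3,
    "user_training_in_place": 1,
    "existing_investment": 2,
}


@dataclass
class Candidate:
    name: str
    evaluation_score: float  # score carried over from Step Four (0-100 assumed)
    fit: dict  # answer per fit question, rated 0.0 (no) to 1.0 (yes)


def fit_score(candidate):
    """Weighted share of the fit questions satisfied, scaled to 0-100."""
    total = sum(FIT_WEIGHTS.values())
    # Unanswered questions count as 0.0 (not satisfied).
    earned = sum(w * candidate.fit.get(q, 0.0) for q, w in FIT_WEIGHTS.items())
    return 100.0 * earned / total


def rank(candidates, fit_share=0.5):
    """Order candidates by a blend of evaluation score and agency fit."""
    def combined(c):
        return (1 - fit_share) * c.evaluation_score + fit_share * fit_score(c)
    return sorted(candidates, key=combined, reverse=True)
```

Raising `fit_share` gives more weight to how well a product suits the agency's environment. Setting it to 0 ranks the products on their Step Four scores alone.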

Step Six - Present analysis and recommendation to governance or decision-making body

Your presentation should help the Governance or Decision-Making Body determine which solution is the best for your agency. Consider both the content of your presentation and the presentation itself.

Content. The recommended solution should be among the top-scoring COTS products in your analysis. Your recommendation should highlight how the product solution selected for your agency addresses the primary needs and concerns of your agency with regard to ERM, as uncovered in Step One.

Since the success of the ERM solution lies with its implementation within your agency, your recommendation should focus on:

- Any successful implementations by others, including any difficulties encountered

- Previous experiences your agency has had with the vendor, if not this specific product

- Projected ease of integrating the system with existing systems and your agency's information infrastructure.

Anything you can recommend that will improve the chances that this product will be successful (e.g., degree of support provided by the vendor for information technology staff; training for users) will factor into the final purchase decision.

A key decision factor might be your agency's previous investment in one of these products, perhaps as a pilot project or non-enterprise-wide application. An existing relationship, experience working with a vendor, and the fact that an investment may have already been made (e.g., licenses purchased) could make the selection from three relatively equal products easier.

Presentation. Understanding what an audience expects is essential to a successful presentation. Your recommendation is more likely to be accepted when the Governance Body does not have to struggle with the format of the presentation in addition to its content, and when decision factors are clearly and succinctly presented. Refer to successful product presentations from others in your agency to guide you as you create your recommendation for a COTS solution for enterprise-wide ERM. The importance of an effective ERM governance structure will be addressed in another ERM Initiative guidance document and made available on the NARA website (http://www.archives.gov/records-mgmt/initiatives/enterprise-erm.html).

Outcome: A COTS solution for your agency-wide ERM initiative.

4. LESSONS LEARNED

EPA sought an independent analysis of its ERDMS plans and evaluation criteria, approaching the Information Technology Resources Board (ITRB) 10 to conduct a review. This section focuses on the lessons learned from the Requirements and Evaluation stages of a selection of a COTS product for ERM at EPA as well as how other federal agencies have determined and set priorities for their ERM requirements. (The knowledge gained during the conduct of a pilot ERM system will be discussed in another guidance within this suite of documents developed under the umbrella of NARA's ERM Initiative.) Based on the information collected, interviews performed, and analysis conducted, the lessons have been grouped into four topic areas: Strategy, Leadership, Organization, and Technology.

TIP: "You can't do ERM if you are not doing RM." If records schedules and file plans are not current, the agency must bring them up to date before implementing an RMA. Programs are changing regularly and their records management needs change along with the programs. Where agencies have not stayed abreast of their changing program environment, they face a labor-intensive catch-up exercise before they are ready to implement any COTS ERM.

Strategy. By the end of the COTS evaluation, it was evident that EPA had not clearly articulated the challenges being faced by the agency in fulfilling its mission due to inefficient workflow processes and difficulties staff had in locating and retrieving documents/records that should be accessible. The COTS evaluation for ERM had focused more on developing a process for evaluating software than on analyzing and streamlining the business processes before seeking a technical solution. The emphasis was placed on repository creation rather than users' ability to access the information they need to do their jobs. Conceptualizing the full process as it exists, and understanding the workflow and the importance of content management, are crucial to enterprise-wide search and retrieval capabilities.

Technology projects require detailed planning on the structure of the project, scheduling, budgets, implementation, project controls, and a determination of forces that might hinder the project, whether internal to the project/agency or external to it. A framework for excellence focuses on quality and the ability to sustain excellence throughout the lifecycle of the project, from initial discussions through implementation enterprise-wide. Agencies are advised to take a phased approach rather than seek a "total solution" for ERM projects.

In preparing an ERM project plan:

- Clearly define the scope of the project and deliverables

- Establish quantifiable objectives

- Analyze the finances from a cost outlay and benefit (savings) perspective

- Establish appropriate methods for project management and control

- Establish a schedule for major tasks/sub-tasks

- Estimate human resource requirements (numbers of individuals and skill sets required to achieve the desired outcomes)

- Build security for the system and the data from the outset, considering versioning, classification, access by employees and the public, and transmission methodologies

- Establish workable procedures for problem reporting and encourage team members to report potential problems as they uncover them so that these situations can be dealt with early-on.

Aligning ERM performance outcomes with your agency's vision, mission, strategies, and goals, and quantifying benefits derived from ERM to measure success, will provide additional material for the ERM team's communication with management and the rest of the agency as part of celebrating successes, discussion of existing challenges, and plans to overcome them with further improvements. This will reinforce the notion that the ERM project is not finite, but will continue to evolve as the needs of the agency change and functionality is added to the technology solution.

Prepare for other potential costs of an ERM to:

- Ensure compliance with Section 508 of the Rehabilitation Act

- Provide website enablement

- Migrate or integrate data, which may include re-keying data or developing in-house interfaces

- Train users.

Leadership. An enterprise-wide solution will have a better chance for success if there is an executive-level business line championing the project. Motivators are needed, particularly from the ranks of senior management.

Success of an ERM project lies with involvement of a cross-section of individuals throughout the agency, including records managers, users, technologists, and management, and a team that is capable of executing the project plan. Equally important is leadership from within the team and sponsorship from senior management. One key office, or one workflow tool, such as electronic signature, can "sell" the whole concept of ERM.

Leaders must create a vision of what might be (how implementing the proposed technology is likely to affect and improve operations) and communicate that vision to compel people into collective action, motivating and encouraging team members. Using the capital planning process outlined in the E-Gov Guidance for Coordinating the Evaluation of Capital Planning and Investment Control (CPIC) Proposals for ERM Applications (http://www.archives.gov/records-mgmt/policy/cpic-guidance.html), leaders should review and validate ERM rigorously.

It is the leader who formalizes the governance structure for ongoing collaboration and decision-making specific to the enterprise-wide nature of ERM by including key stakeholders from programs, headquarters offices, and locations throughout the country. Options for ERM governance structures will be included in another guidance document to be made available on the NARA website (http://www.archives.gov/records-mgmt/initiatives/enterprise-erm.html).

Sponsors must present concrete evidence that the changes suggested as a result of the analysis and implementation of ERM are essential for the health and growth of the organization. Typically, they use influence (i.e., personal power or status) to obtain resources for the project and deal with issues beyond the leader's control.

Managers focus on getting the job done efficiently, allocating resources to specific tasks, and maintaining the project's documentation, assuring accountability and traceability. Managers create and maintain an effective working relationship between the ERM project team, users, and vendors. They coach other members of the team about how to improve their performance on the project. For example, it is the manager who is responsible for seeing to it that team members are trained so that they can perform their project tasks within the required timeframes.

All teams require leadership, management, and sponsorship to succeed. While these are distinct functions within the team, they are not mutually exclusive, and leaders must possess management skills and, in particular circumstances, must assume management roles.

Organization. A strategic lesson was learned concerning the importance of reviewing and improving existing business processes during the preliminary steps leading up to assessing requirements for an ERM system. This review allows an agency to validate its current practices and identify areas for change as part of a comprehensive approach to the implementation of an ERM system. While documenting the current business processes, the desired future state should also be described as it may indicate the need for policy changes.

All departments whose work will be affected by these changes will have to be brought on-board and made to appreciate why these changes are necessary. This reinforces the importance of communication about the ERM project throughout the organization and duration of the project, from pre-planning through implementation and on-going training of staff as improvements are made to the system.

An ERM project must develop and implement a marketing and communications plan to publicize ERM, encouraging and enhancing collaborative efforts both inside your agency (between and among departments throughout the organization) and outside (e.g., with vendors). Your communications should describe how ERM supports and facilitates your agency's mission and its business objectives, providing a clear understanding of the scope of the project and its desired outcomes.

Continuous communication with both management and staff throughout the agency concerning ERM project schedules and milestones achieved, including risks involved along the way (as well as the risk of not taking on ERM in a comprehensive manner) will further assure buy-in from management and users alike. These communications and recognition of milestones can take on a number of forms and employ a variety of vehicles. Mechanisms for two-way communication, allowing employees to pose questions concerning the ERM project, will solidify this notion of inclusion.

Identifying challenges to be faced during the course of the project will let others know that the ERM project management team is aware of how ERM will affect the work of their colleagues throughout the agency. This communication will help colleagues appreciate the role of the ERM project team within the agency and its importance to the agency's mission and success strategy.

Key elements to making your ERM communication and marketing effort successful include:

- Emphasizing the benefits for users

- Ensuring that communication is straightforward, timely, and candid

- Formalizing a structured approach to obtaining feedback and incorporating it into the process

- Maintaining consistency of the message about ERM development and deployment

- Continually reinforcing that there is support and commitment from the executive levels of the organization

- Including a public relations effort as part of the strategy

- Acknowledging and addressing valid unresolved issues

- Managing expectations.

Success of any technology project relies on collaboration and teamwork. Adequate staffing is crucial. ERM projects need a staffing strategy that is in line with the agency's practices and project requirements. The focus should be on building a team of players possessing managerial and technical skills, all of whom understand the vision for the project and its desired effect on the agency's operations and who are committed to the success of the project. When building teams, concentrate on the desired results of the project and assemble talents and perspectives that complement each other. This approach will better assure that your ERM project meets its desired outcome.

Even if contractor staff provides ERM technical support, it is imperative that agency staff provide technical oversight. Key individuals providing project oversight should have a sufficient blend of both technical and subject matter expertise to effectively manage the ERM system. Key technical positions include:

- Contracting Officer Technical Representative (COTR) providing management and oversight of contractor services

- Technical Lead leading the team in making decisions about technical systems development, providing technical direction for project management and oversight through implementation

- Requirements Manager heading the development of comprehensive requirements for ERM systems, ensuring stakeholder involvement

- Test Manager heading the team in the development and execution of a comprehensive test plan(s), ensuring rigorous testing of the ERM solution in the agency's technical environment, and managing testing of interfaces with other systems

- Change Management Lead handling ERM solution communication and organizational change efforts for the project throughout the agency to ensure consistent messaging and organizational awareness

- Functional Lead(s) directing all aspects of the systems development effort

- Budget/Cost Analyst assisting in managing costs related to the project

- Quality Assurance (QA) Manager establishing software quality assurance processes and standards to ensure production of quality products that will perform in the agency environment

- Information Security Systems Manager (ISSM) establishing security measures and policies necessary to safeguard the system and the documents contained within the system.

Technology. The overall business need, rather than the technological features of a COTS product, should drive the selection of a vendor partnership. Developing a modular strategy for a total solution that will meet an agency's business needs for records and document management will give the project team more flexibility in phased project development and ERM implementation.

By instituting consistent, enterprise-wide use of metadata (i.e., a standard reference scheme for information on names and associated classification that describes data content, quality, condition, record creator, date and time of creation, associated documents, version number, and other characteristics) and content classification and categorization (taxonomy), agencies can significantly enhance the effectiveness of searches (index and text) and make possible searches across multiple collections of materials that are distributed across several repositories. Agencies should ensure that a data taxonomy, metadata standards, and a partition of content into relevant collections are developed and implemented for their ERM projects.
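To make the point about consistent metadata concrete, the following Python sketch shows how one shared metadata structure lets a single query run across several repositories. It is an illustration under stated assumptions, not a prescribed schema: the field names are drawn loosely from the characteristics listed above, and `RecordMetadata`, `search`, and the use of plain dictionaries as repositories are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class RecordMetadata:
    """One agency-wide metadata shape (illustrative fields only)."""
    title: str
    creator: str
    created: datetime
    version: int
    categories: tuple  # terms from the agency taxonomy (hypothetical)
    associated_documents: tuple = ()


def search(repositories, category=None, creator=None):
    """Return (repository name, metadata) pairs that match every given filter.

    `repositories` maps a repository name to a list of RecordMetadata.
    Because every repository uses the same fields, one loop covers them all.
    """
    hits = []
    for repo_name, records in repositories.items():
        for meta in records:
            if category is not None and category not in meta.categories:
                continue
            if creator is not None and meta.creator != creator:
                continue
            hits.append((repo_name, meta))
    return hits
```

If each repository used its own field names or category terms, the same query would need a separate translation for every collection, which is the situation a common taxonomy and metadata standard are meant to avoid.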

TIP: Keep in mind your existing information architecture and requirements, recognizing that changes to it are likely and unavoidable.

Lessons learned from ERM initiatives at other federal agencies include the importance of keeping the development process simple and limited, with phased deployment recommended. Agency experience shows that progress is best made in phased steps rather than all at once. A phased approach will limit the number and magnitude of errors, keeping them manageable. Your ERM team will take what they learn from one phase, and apply it to the next, avoiding unnecessary delays and costs.

A phased approach also helps with evaluation measures, limiting the number of metrics one needs to gather at any one time. The purpose of monitoring and tracking a project's progress is to identify areas in which a change is warranted. The key to a successful evaluation lies in how responsive you are; i.e., how quickly you can identify that a problem exists and put together a plan to correct it.

TIP: Try to integrate the best functional components rather than seeking the perfect product.

Training. Appropriate training of users is another essential lesson for ERM projects, not only on the system, but on basic records management. Staff trained on systems are more comfortable using them and use them more often and more effectively than those who do not receive adequate or timely training. Training should occur while installation is going on so that users can immediately apply what they learned as soon as the ERM system goes live.

Several federal agencies have adopted a policy that all new staff receive records management training. Procedurally, each has implemented the policy in different ways. In one agency, staff cannot get a network password until they have taken a records management training course. In another agency, the first time a new employee attempts to use a function, such as email, the system requires the employee first to complete computer-based training.

Vendors sell training separate from software and customer support (installation and servicing the software on the customer's site). It is important to note that:

- Training is a separate cost item and may be substantial

- Vendor training is expensive and may not be effective for your installation. Vendors can train on their own software applications, but they do not necessarily understand federal records management well, nor the mission and culture of customer agencies.

- Agencies are taking a "train the trainers" approach, sending their own staff to get vendor training on the ERM application and then using their trained staff to train end-users. In this way, they are able to incorporate the specific requirements of their agency's approach into the coursework.

- Agencies deploying ERM to a sizable number of seats are investing in online computer-based training (CBT).

- Agencies are combining approaches. For example, end-users may first attend a group session with trainers, then take the CBT, and then meet again with trainers if problems are encountered.

Agencies that view their ERM as a partnership (with sponsors, senior management, target user groups, information technology departments, and vendors) are likely to have a smoother process from initiation through implementation. Involving people, keeping them informed about the progress being made, and training them to be good records managers will encourage them to use the new ERM system.

5. SUMMARY

There are six basic steps in the process for selecting COTS software for enterprise-wide ERM systems:

- Analyze existing requirements

- Develop a manageable set of high-level criteria and scoring guide

- Gather information about each product

- Evaluate COTS against criteria and score each product

- Determine how the top three COTS solutions match your agency's specific requirements

- Present analysis and recommendation to governance or decision-making body.

Critical success factors for conducting comprehensive evaluations of COTS systems for ERM include:

- Involving a cross-section of individuals throughout the agency in the process, keeping them informed of the project's progress along the way. Users and agency management cooperating with information technology (IT) departments (whose system engineers bring the technical know-how in terms of software selection, implementation, integration with existing architecture, and maintenance requirements) and records managers (RM) (who understand the principles used in managing collections of documents) provide the 360° view required for system selection. The teaming of IT and RM is essential, assuring that the system selected can be modified to best serve the agency.

- Updating records schedules and plans for your agency, adjusting processes to improve workflow prior to implementing ERM. For some agencies, this may mean a shift in thinking from exclusively physical records to incorporating electronic records into the records disposition schedules for the first time. For others, it may be incorporating the records generated by new programs into the agency file plan. Updating records schedules and file plans includes conducting a records inventory, a systematic search for what records the agency has and where they are located. An inventory of systems and processes that generate records will lead to a better understanding of any integration requirements during the COTS evaluation.

- Constructing a cost-benefit analysis or developing a thorough business case that addresses the current costs, volumes, existing systems and processes, enterprise-wide.

- Casting a wide net for gathering information concerning COTS ERM products and vendors. Resources to be consulted include brochures; technical specifications provided by the vendors; white papers provided by the vendors and independent parties; vendor product websites; association websites; articles and case studies; and discussions with other federal agencies using these products and/or working with these vendors.

- Developing a modular strategy for a total solution that will meet your agency's business needs for records and document management, integrating the best functional components rather than seeking a single, perfect product.

- Offering training for all staff on the principles of records and document management, the benefits of an enterprise-wide approach to records management, and the ERM solution itself.

Consider the lessons learned by others who have been involved with ERM projects. Reviewing your own project at the close of each phase, noting things that, upon reflection, you might have done differently will help you as you move to the next phase of this project. Maintaining a record of these "lessons learned" will assist others planning technology projects at your agency. Let others know of the existence and location of those "lessons learned" so that they might access them easily when they are needed.

This document, the third of six documents to be produced under the Enterprise-wide ERM Issue Area, provides guidance on developing agency-specific functional requirements for ERM systems to aid in the evaluation of COTS products. Upcoming documents within the Enterprise-wide ERM Issue Area will consist of guidance for Building an Effective ERM Governance Structure, developing and launching an ERM pilot project, and a "lessons learned" paper from EPA's proof of concept ERM pilot as well as other agencies' implementation experience. All are aimed at helping federal agencies understand the technology and policy issues associated with procuring and deploying an enterprise-wide ERM system.

Glossary

DoD 5015.2-STD 11 - A Department of Defense (DoD) and NARA approved set of minimum functional requirements for ERM applications. It specifies design criteria needed to identify, mark, store, and dispose of electronic records. It does not define how the product is to provide these capabilities. It does not define how an agency manages electronic records or how an ERM program is to be implemented.

E-FOIA - Electronic processing of Freedom of Information Act (FOIA) requests, determination of fee charges and waivers, workflow, redaction, and response.

Electronic Content Management (ECM) 12 - The technologies used to capture, manage, store, and deliver content and documents related to organizational processes; also called Enterprise Content Management.

Electronic Document Management (EDM) 13 - Computerized management of electronic and paper-based documents. EDM includes a system to convert paper documents to electronic form, a mechanism to capture documents from authoring tools, a database to organize the storage of documents, and a search mechanism to locate the documents.

Electronic Records Management (ERM) 14 - Using automated techniques to manage records, regardless of format. It supports records collection, organization, categorization, storage of electronic records, metadata, and location of physical records, retrieval, use, and disposition.

Enterprise and Enterprise-wide - Implementation of a single software application suite throughout all levels and components of an agency or organization. 15

Metadata - A term that describes or specifies characteristics that need to be known about data in order to build information resources such as electronic recordkeeping systems and support records creators and users. 16

Records Management Application (RMA) 17 - A software application to manage records. Its primary management functions are categorizing and locating records and identifying records that are due for disposition. RMA software also stores, retrieves, and disposes of the electronic records that are maintained in its repository. DoD 5015.2-STD requires that RMAs be able to manage records regardless of their media.

Appendix A: Overview of Steps Leading up to Evaluation of COTS Requirements

Before you determine your agency's requirements for an enterprise-wide ERM system, you must assess its particular needs for automating the records management process. Include a cross-section of staff representing a diverse set of responsibilities and levels to assure that the system purchased will possess the functionality needed by the agency. Involve representatives from the following groups:

- Document creators

- Records managers

- Users

- Management.

NARA defines ERM as the use of "automated techniques to manage records regardless of format. Electronic records management is the broadest term that refers to electronically managing records on varied formats, be they electronic, paper, microform, etc." 18 For the purpose of this initiative:

- Electronic Records Management (ERM) supports records collection, organization, categorization, storage, metadata capture, physical record tracking, retrieval, use, and disposition. This definition is consistent with NARA's definition, but elaborates further on the functionality generally offered in ERM systems. 19

- Electronic Document Management (EDM) is computerized management of electronic and paper-based documents. It includes a system to convert paper documents to electronic form, a mechanism to capture documents from authoring tools, a database to organize the storage of documents, and a search mechanism to locate the documents. 20

- E-FOIA is electronic processing of Freedom of Information Act (FOIA) requests, determination of charges and waivers, workflow, redaction, and response. E-FOIA functional requirements (such as redaction, cost tracking, and annual reporting) were examined by another group, so EPA's COTS evaluation was limited to E-FOIA integration requirements only.

E-FOIA integration requirements may include:

- The ability of ERDMS to transfer objects to E-FOIA or for E-FOIA to point to the ERDMS repository

- The ability of the E-FOIA system to search the ERDMS repository

- The ability of the E-FOIA system to create records in the ERDMS repository (or update retention periods for records, if appropriate)

- Compatibility of the ERDMS architecture and the E-FOIA application architecture. This includes user security mechanisms, encryption techniques, compatible metadata, and object transfer.

Two central issues for the integration of E-FOIA are whether:

1. The requested records are referenced in ERDMS or are copies placed into an E-FOIA repository

2. The records created by the E-FOIA application (e.g., response letter, redacted version of documents, Congressional report) are stored and maintained under file plans in an exclusive E-FOIA repository or in ERDMS.
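The integration requirements and the two issues above can be pictured with a small Python sketch. It shows only one of the possible answers: the E-FOIA case points to records held in the ERDMS repository rather than copying them, and the response it generates is filed back into ERDMS. Every class, method, identifier, and file plan name here is a hypothetical stand-in, not an interface of any actual product.

```python
from dataclasses import dataclass


@dataclass
class StoredRecord:
    record_id: str
    title: str
    file_plan: str
    retention_years: int  # placeholder value, not taken from any schedule


class ErdmsRepository:
    """In-memory stand-in for an ERDMS repository (hypothetical interface)."""

    def __init__(self):
        self._records = {}

    def create_record(self, record):
        self._records[record.record_id] = record
        return record.record_id

    def search(self, term):
        # Case-insensitive match on the record title.
        term = term.lower()
        return [r for r in self._records.values() if term in r.title.lower()]

    def update_retention(self, record_id, years):
        self._records[record_id].retention_years = years


class EfoiaCase:
    """A FOIA request that references ERDMS records instead of copying them."""

    def __init__(self, case_id, repository):
        self.case_id = case_id
        self.repository = repository
        self.responsive_ids = []

    def collect(self, term):
        # Keep pointers (record IDs) only; the objects stay in ERDMS.
        self.responsive_ids = [r.record_id for r in self.repository.search(term)]
        return self.responsive_ids

    def file_response(self, title, retention_years):
        # Store the record created by E-FOIA in ERDMS under a file plan.
        record = StoredRecord(f"{self.case_id}-response", title,
                              "FOIA case files", retention_years)
        return self.repository.create_record(record)
```

The alternative design, in which copies of requested records and E-FOIA outputs live in an exclusive E-FOIA repository, would replace the stored IDs with transferred objects and would require its own file plans and retention handling.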

Other systems installed in your agency, such as a Correspondence Management System used to manage your agency's controlled correspondence, should be included in your enterprise-wide ERM initiative. Often overlooked during the planning process are forms which, when completed, are usually records covered under NARA's General Records Schedules. Forms completed online will likely become federal records, particularly when the data pertain to matters such as regulatory reporting or applications for benefits. As part of an ERM initiative, agencies should bring forms into their planning process.

The foundation of an ERDMS is document management, including version control, workflow, and storage. Once a document or other "object" is designated as a record, the proper retention and disposition are applied. EPA's goal is to implement an agency-wide, integrated ERDMS to manage documents and records throughout their lifecycle.

EPA began with a records management application (RMA) 21 workgroup charged with the task of looking at technical requirements for an agency-wide ERM system. A Steering Committee reviewed business processes seeking opportunities for improvement and a Task Force developed an agency-wide strategy for document management. As questions arose, requiring expertise that did not exist among the members of these teams, input was sought from external resources within EPA, other federal agencies, and third-party experts.

EPA's ERM work groups and committees made several assumptions:

System users. Every employee is responsible for managing records. Therefore, each employee needs access and must be trained to use ERM tools.

System focus. The primary focus of the system is documents and records created or received by the agency.

Centralized approach. A centralized system with a single RMA would best meet EPA's requirements.

Migration over integration. While integration with legacy systems was not the primary focus at EPA, the selected product must be able to accommodate legacy systems. Over time, legacy repositories will be migrated to the new system; however, some number of integrations will be required.

Compatible architecture. The selected product must work with EPA's infrastructure standards.

Section 508 requirements. The product must comply with Section 508 of the Rehabilitation Act. This is a statutory requirement that all agencies must consider.

While Web Content Management and Enterprise Search/Portal are related and may be integrated, these issues were addressed separately by an EPA Task Force.

Appendix B: Criteria Used by EPA for COTS Evaluation

Appendix C: Resources for the Evaluation of Commercial Off-the-Shelf (COTS) Software

The following documents were referred to by EPA officials as they decided on the requirements for an ERM product to test in a pilot project.

Association for Information and Image Management (AIIM). (1999, April).

Requirements for document management services across the global

business enterprise.

Association for Information and Image Management (AIIM) and American National

Standards Institute (ANSI). (2004). Framework for integration of electronic

document management systems and electronic records management

systems (ANSI/AIIM TR48-2004).

Association of Records Managers and Administrators (ARMA). (1999, December). DOD 5015 review task force comments to DoD.

DISA, Joint Interoperability Test Command Records Management Application (RMA) Certification Testing for compliance with DoD 5015.2-STD. Retrieved July 13, 2005, from http://jitc.fhu.disa.mil/recmgt/index.html

Doculabs 1998 Records Management Benchmark Study outlines the critical categories that are important to consider when evaluating records management technologies.

European Union (EU). (2001, March). Model requirements for the management of electronic records. Retrieved July 13, 2005, from Europa Web site http://ec.europa.eu/idabc/en/document/2303/5927.html

U.S. Environmental Protection Agency. (2002, January). Electronic Records and Document Management Functional System Requirements.

U.S. Environmental Protection Agency. (2001, March). National Administrative Systems Evaluation Study (NASES) Final Report.

U.S. Environmental Protection Agency. (1999, January 26). Response Management Pilot Project EDM/ERM Functional Requirements Document (Rev. 2.0).

U.S. Environmental Protection Agency, Records Management Application (RMA) Workgroup. (2001, March 28). Functional Requirements For Electronic Records Management Software Application, Draft Version, based upon the Department of Defense's (DOD) 5015.2 requirements for records management applications with additional EPA requirements added.

1 Electronic Document Management (EDM) is the computerized management of electronic and paper-based documents. It includes a system to convert paper documents to electronic form, a mechanism to capture documents from authoring tools, a database to organize the storage of documents, and a search mechanism to locate the documents.

2 Electronic Records Management (ERM) uses automated techniques to manage records, regardless of format. It supports records collection, organization, and categorization; storage of electronic records and metadata; location of physical records; and retrieval, use, and disposition.

3 http://www.archives.gov/records-mgmt/policy/cpic-guidance.html

4 http://www.archives.gov/records-mgmt/policy/requirements-guidance.html

5 National Archives and Records Administration. (2003). Guidance for Coordinating the Evaluation of Capital Planning and Investment Control (CPIC) Proposals for ERM Applications. Retrieved July 13, 2005, from http://www.archives.gov/records-mgmt/policy/cpic-guidance.html

6 Prior to getting into the details of EPA's experience, it may be helpful to understand a little about the agency. EPA employs 18,000 people across the country, including headquarters offices in Washington, DC, 10 regional offices, and more than a dozen labs. Its staff is highly educated and technically trained; more than half are engineers, scientists, and policy analysts. In addition, a large number of employees are legal, public affairs, financial, information management and computer specialists. EPA has an information-intensive mission, and is heavily involved in public contact, as well as litigation, necessitating timely and thorough retrieval of documents across the agency.

7 See http://www.whitehouse.gov/omb/circulars/a11/current_year/a11_toc.htm

8 See http://www.whitehouse.gov/omb/circulars/a130/a130trans4.pdf

9 Standards and other publications that will help you to develop requirements for your ERM system can be found on many of these organizations' websites: American National Standards Institute (http://www.ansi.org); Association for Information and Image Management (http://www.aiim.org); ARMA International (http://www.arma.org).

10 The Information Technology Review Board (ITRB) is a group of senior IT, acquisition, and program managers with significant experience developing, acquiring, and managing information systems in the Federal Government. Members are drawn from a cross section of agencies and are selected for their specific skills and knowledge. The ITRB provides, at no cost to agencies, peer reviews of major Federal IT systems. Additional information concerning the Information Technology Review Board can be found on the Board's website (http://itrb.gov/).

11 Joint Interoperability Test Command. (n.d.). Records Management Application (RMA) Compliance Testing Program. Retrieved September 15, 2005, from http://jitc.fhu.disa.mil/recmgt/index.html

12 Association for Information and Image Management. (n.d.). Enterprise Content Management (ECM) Definitions. Retrieved September 15, 2005, from http://www.aiim.org/What-is-ECM-Enterprise-Content-Management#

13 National Archives and Records Administration. (2004). Context for Electronic Records. Retrieved July 13, 2005, from http://www.archives.gov/records-mgmt/initiatives/context-for-erm.html

14 National Archives and Records Administration. (2004). Context for Electronic Records. Retrieved July 13, 2005, from http://www.archives.gov/records-mgmt/initiatives/context-for-erm.html

15 National Archives and Records Administration. (2003). Guidance for Coordinating the Evaluation of Capital Planning and Investment Control (CPIC) Proposals for ERM Applications. Retrieved July 13, 2005, from http://www.archives.gov/records-mgmt/policy/cpic-guidance.html

16 National Archives and Records Administration. (2004). Context for Electronic Records. Retrieved July 13, 2005, from http://www.archives.gov/records-mgmt/initiatives/context-for-erm.html

17 National Archives and Records Administration. (2004). Context for Electronic Records. Retrieved July 13, 2005, from http://www.archives.gov/records-mgmt/initiatives/context-for-erm.html

18 National Archives and Records Administration. (2004). Context for Electronic Records. Retrieved July 13, 2005, from http://www.archives.gov/records-mgmt/initiatives/context-for-erm.html

19 National Archives and Records Administration. (2003). Guidance for Coordinating the Evaluation of Capital Planning and Investment Control (CPIC) Proposals for ERM Applications. Retrieved July 13, 2005, from http://www.archives.gov/records-mgmt/policy/cpic-guidance.html

20 National Archives and Records Administration. (2004). Context for Electronic Records. Retrieved July 13, 2005, from http://www.archives.gov/records-mgmt/initiatives/context-for-erm.html

21 The primary management functions of Records Management Application (RMA) software are categorizing and locating records and identifying records that are due for disposition. RMA software also stores, retrieves, and disposes of the electronic records that are maintained in its repository.

Page updated: April 26, 2019